Machine Learning Operations – MLOps

Artificial intelligence (AI) and machine learning (ML) are key factors for business, helping companies to plan and personalise their products and to improve business operations across the globe.

Early concept made possible with technological advancement

The origin of artificial intelligence (AI) as a computer science field that aims to simulate every aspect of human intelligence by using computing machines dates to 1956, when John McCarthy et al. organised the Dartmouth Summer Research Project on Artificial Intelligence 1 . That workshop was the seed of a unified identity for the field and a dedicated research community that led to numerous breakthroughs during the subsequent decades 2 . Despite the rapid growth of different aspects of artificial intelligence, the field could still boast no significant practical success, and nearly every computer program that was built to interact with humans was codified with a defined set of rules, only allowing rudimentary displays of intelligence in specific contexts and with capabilities limited to specific tasks. Rule-based systems, also known as expert systems, cannot be designed to address complex problems where programming the set of rules that defines the body of knowledge is impractical or impossible.

It was not until the nineties that the AI focus started drifting from expert systems toward a new paradigm built upon the idea that programs should be able to learn, so that AI could start displaying its potential 3 . It is in this new paradigm that modern AI has its roots and the field of machine learning (ML) is born, being the “field of study that gives computers the ability to learn without being explicitly programmed,” as stated by Arthur Samuel. Machine learning algorithms learn through exposure to data, usually via an iterative process or an ensemble of statistical samplings of the data. A key feature of ML is that its predictions improve with experience and with the use of more relevant data, at least up to a certain point. Thus, by learning through practice instead of following defined sets of rules, machine learning systems deliver better solutions than expert systems in numerous cases, and more complex problems become accessible.

However, the advent of machine learning would not have been possible without the development of two key elements. First, the progress of hardware computing resources, such as the continuous increase in the number of transistors on microchips following Moore’s law and, more recently, the use of graphics processing units (GPUs). Secondly, the availability of a large amount of data, primarily driven by the invention of the World Wide Web and mobile technology. By the end of 2020, 44 zettabytes were stored in the cloud, and it is estimated that this will increase to more than 200 zettabytes by 2025 4 . These factors have led to unprecedented progress in statistical models, algorithms and applications that have brought AI and ML into the limelight. Thus, AI solutions are already being applied in virtually every industry with excellent results. Some distinguished examples are automated medical diagnosis, voice input for human-computer interaction, intelligent assistants, AI-based cybersecurity and self-driving cars.

Catalyst for digital business

70% of the globe’s GDP will have gone through some form of digitisation by 2022, and by 2023, investments in Direct Digital Transformation will amount to $6.8 trillion 5 . As companies undergo their journey of digitalisation today, the use of ML is a key feature in automating, predicting, planning and personalising their products. However, the integration of ML within the business chain comes with new challenges that have a tremendous impact on business. Some of the questions that companies are facing nowadays are not related to how to build ML models, but rather to which built models are in use, what they are doing and whether the data used reflects the state of the world. Although the answers to these questions might seem simple when compared with the complexity of the ML algorithms, the questions are usually overlooked, with negative effects on business 6 . To align models with business needs and to generate business value, it is therefore essential not only to build the ML model but also to deal with dataset management, with monitoring and deploying models, and with building processes that are shareable and repeatable throughout an organisation. To provide a solution to those issues, a set of best practices has been codified into a new field, Machine Learning Operations, or MLOps.

MLOps is thus a set of practices for the operationalisation of ML models that aims to build, deploy and monitor ML applications quickly and reliably, and that facilitates collaboration and communication between data scientists and operations professionals. Some of the MLOps capabilities are 7 :

- MLOps allows the unification of the release cycle for machine learning and software application releases.

- MLOps enables automated testing of machine learning artefacts, e.g., data validation, ML model testing and ML model integration testing.

- MLOps enables the application of agile principles to machine learning projects.

- MLOps supports machine learning models, and the datasets used to build them, as first-class citizens within CI/CD systems.

- MLOps reduces technical debt across machine learning models.

- MLOps must be a language-, framework-, platform-, and infrastructure-agnostic practice.

Seven core principles

According to Microsoft’s Machine learning DevOps guide 8 , seven core principles should be considered when adopting MLOps for any ML-based projects:

- version control code, data, and experimentation output – to ensure reproducibility of experiments and inference results

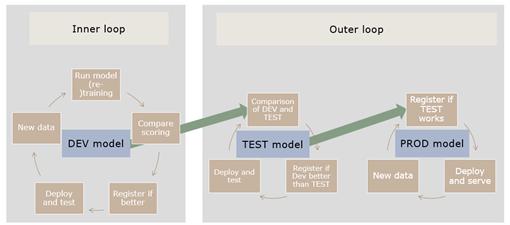

- use multiple environments – to segregate development and testing from production work, as shown below.

- manage infrastructure and configuration with infrastructure-as-code – for consistency between environments

- track and manage machine learning experiments – for quantitative analysis of experimentation success and to enable agility

- test code, validate data integrity, model quality – to test the experimentation code base

- machine learning continuous integration and delivery – to ensure that only models of sufficient quality land in production

- monitor service, models, and data – to serve machine learning models in an operationalised environment

It is worth mentioning that the interpretation of these Microsoft principles should be flexible, i.e., they are not a set of rules that must be adopted when designing an ML project. Specifically, the second core principle, use multiple environments, can be omitted most of the time during development, even though it is relevant to follow it for functional testing of applications and APIs. As an illustration, in our solution, we adopt only a reduced set of those principles.

In this work, a proposed MLOps solution is presented in terms of infrastructure configuration, data preprocessing workflow and end-to-end model development workflow. The aim is to showcase how MLOps principles can be brought into practice using cloud computing resources from Azure. The proposed MLOps solution is based on the above-mentioned core principles, with the focus in this paper on principles 1, 3, 4 and 6.

Proposed MLOps solution

The core components of the proposed MLOps solution can be summarised in terms of infrastructure configuration, data preprocessing workflow and end-to-end model development workflow, which will be presented below.

Infrastructure configuration

- Infrastructure-as-code: Terraform

- Code repository: Azure DevOps repo

- Data repository: Azure data lake with a Delta Lake storage layer

- Model repository: MLflow

- Model hosting server: Docker container built with Azure Pipelines

Data preprocessing workflow

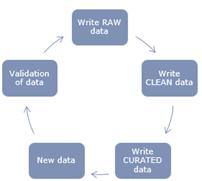

- Data preprocessing workflow illustrated

- Validation of new data

- Writing data to the Delta Lake RAW layer

- Writing data to the Delta Lake CLEAN layer

- Writing data to the Delta Lake CURATED layer

- Data lake storage structure

New data is fetched by ETL pipelines on a schedule, which is a story of its own. Before the new data enters the data preprocessing workflow, its quality needs to be validated. In this solution, data quality is measured against knowledge obtained from previously stored data, and validated through:

- ensuring that the new data is not identical to the latest stored data

- ensuring that the new data has the same features/columns as the latest stored data

- ensuring that the new data has the same statistical properties as the stored data, checked through hypothesis testing with the stored data serving as the null hypothesis, using Great Expectations 9 .

If any step in the validation fails, a webhook built using pymsteams 10 notifies a Teams channel with information regarding the validation failure, and the data preprocessing workflow is terminated.
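The three checks above can be sketched in plain Python. This is only an illustration under stated assumptions: the solution itself uses Great Expectations, whereas the sketch swaps in a simple two-sample comparison of means, and the function name `validate_new_data`, the `numeric_column` argument and the `z_threshold` default are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def validate_new_data(new_rows, stored_rows, numeric_column, z_threshold=3.0):
    """Return a list of failure messages; an empty list means the batch passed.

    Rows are dicts; both batches are assumed to hold at least two rows.
    """
    failures = []

    # Check 1: the new batch must not be identical to the latest stored batch.
    if new_rows == stored_rows:
        failures.append("new data is identical to the latest stored data")

    # Check 2: the new batch must have the same columns as the stored batch.
    new_columns = {key for row in new_rows for key in row}
    stored_columns = {key for row in stored_rows for key in row}
    if new_columns != stored_columns:
        failures.append(f"column mismatch: {sorted(new_columns ^ stored_columns)}")
        return failures  # the statistical check needs matching columns

    # Check 3 (stand-in for the hypothesis test): under the null hypothesis
    # that both batches share a mean, the gap between the sample means should
    # stay within a few standard errors.
    new_values = [float(row[numeric_column]) for row in new_rows]
    stored_values = [float(row[numeric_column]) for row in stored_rows]
    standard_error = sqrt(
        stdev(new_values) ** 2 / len(new_values)
        + stdev(stored_values) ** 2 / len(stored_values)
    )
    gap = abs(mean(new_values) - mean(stored_values))
    if gap > z_threshold * standard_error:
        failures.append(f"distribution shift in column {numeric_column!r}")

    return failures
```

A caller would terminate the workflow and send the returned messages to the notification channel whenever the list is not empty.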

When new data has passed validation, it is ready to be stored in the Delta Lake RAW layer, i.e. stored as a delta table in the data lake RAW-data folder. The aim of the RAW layer is to have version control of the source data without any processing.

Data from the Delta Lake RAW layer is cleaned by standardising column names, casting column types and dropping sensitive information, such as personal data. The cleaned data is then written to the Delta Lake CLEAN layer, i.e. stored as a delta table in the data lake CLEAN-data folder. The aim of the CLEAN layer is to have version control of data that is ready to be used for any purpose while staying as close as possible to the original source data. No use-case specific processing is performed.

Cleaned data from multiple Delta Lake CLEAN layers can be joined or merged for a specific purpose at this step and written to the Delta Lake CURATED layer, i.e. stored as a delta table in the data lake CURATED-data folder. The aim of the CURATED layer is to have version control of joined or merged data for a specific purpose.

    data_lake_storage_account
    |__ data_lake_file_system
        |__ source_data_folder
        |   |__ source_file (Source data)
        |__ RAW_data_folder (RAW layer)
        |   |__ delta_table (parquet format)
        |__ CLEAN_data_folder (CLEAN layer)
        |   |__ delta_table (parquet format)
        |__ CURATED_data_folder (CURATED layer)
            |__ delta_table (parquet format)
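The cleaning step between the RAW and CLEAN layers can be illustrated with a small plain-Python sketch. The column names, the `SCHEMA` mapping and the `SENSITIVE_COLUMNS` set are hypothetical examples, and reading from and writing to the delta tables is left out.

```python
from datetime import date

# Hypothetical schema: source column name -> (clean column name, type caster).
SCHEMA = {
    "Meter ID": ("meter_id", str),
    "Reading (kWh)": ("reading_kwh", float),
    "Read Date": ("read_date", date.fromisoformat),
}

# Hypothetical columns holding personal data that must not reach the CLEAN layer.
SENSITIVE_COLUMNS = {"Customer Name", "Email"}


def clean_row(raw_row):
    """Rename and cast the columns of one RAW row and drop sensitive columns."""
    cleaned = {}
    for source_name, value in raw_row.items():
        if source_name in SENSITIVE_COLUMNS:
            continue  # never copy personal data into the CLEAN layer
        # An unknown column raises KeyError on purpose, so that schema changes
        # in the source are noticed instead of silently passed through.
        clean_name, caster = SCHEMA[source_name]
        cleaned[clean_name] = caster(value)
    return cleaned
```

Note that nothing use-case specific happens here: the row keeps all of its non-sensitive information, only in a consistent shape.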

The term “layer” is commonly used in MLOps to indicate data versioning checkpoints for data with different processing levels. The proposed solution suggests having RAW, CLEAN and CURATED layers of data with the definitions given in this summary. Another popular definition uses BRONZE, SILVER and GOLD layers 11 . Which one to pick is up to the developers, as long as the definitions are clear to the whole developer team.

End-to-end model development workflow

- Workflow illustration

- Run model (re-)training

- Put model in staging

- Comparison of staging model and production model

- Move staging model to production

- Model run-time deployment

When data has been curated to the Delta Lake curated layer for a specific purpose, it’s time for model (re-)training. A model pipeline is constructed at this step, which consists of several data preprocessing steps and a final predictor step. In this solution, the following actions are carried out for model (re-)training:

- Define data preprocessor steps using sklearn pipelines 12 , for example, selecting columns, removing outliers and performing feature engineering.

NOTE: These preprocessor steps are performed in the model-pipeline (and not in the curated layer stage) because of the exploratory nature of ML. For the same purpose, for example time series forecasting, the preprocessor-pipeline steps for different models can look very different. Therefore, the preprocessor-pipeline steps should be bound to a model rather than a purpose – data in the curated layer are curated for a purpose.

- Specify train, validation and test datasets by splitting data in the Delta Lake curated layer into training, validation and test data, and fit the preprocessor-pipeline to the training data.

- Define the parameters for optimisation and the objective function, and perform hyperparameter optimisation (using Hyperopt 13 ) using the preprocessed training data.

- Add the model with the best parameters to the model-pipeline as the final predictor step.

- Run the validation data through the model-pipeline.

- Log the best model and all necessary parameters/metrics and data versions in MLflow tracking for experimentation version control.
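The data flow of these steps can be sketched without any ML library. The sketch below is a deliberately small stand-in, not the solution's code: the solution uses sklearn pipelines, Hyperopt and MLflow, whereas here the preprocessor is a hand-written scaler, the model is a one-feature ridge regression, the search is a plain loop over a hypothetical `alphas` grid, candidates are scored on the validation split for brevity, and the returned dictionary stands in for what would be logged.

```python
from statistics import mean, pstdev


class Scaler:
    """Preprocessor step: standardise a feature with statistics learned in fit()."""

    def fit(self, xs):
        self.center, self.scale = mean(xs), pstdev(xs) or 1.0
        return self

    def transform(self, xs):
        return [(x - self.center) / self.scale for x in xs]


class Ridge1D:
    """Predictor step: one-feature ridge regression, assuming a centred feature."""

    def __init__(self, alpha):
        self.alpha = alpha

    def fit(self, xs, ys):
        self.bias = mean(ys)
        numerator = sum(x * (y - self.bias) for x, y in zip(xs, ys))
        self.weight = numerator / (sum(x * x for x in xs) + self.alpha)
        return self

    def predict(self, xs):
        return [self.weight * x + self.bias for x in xs]


def mse(ys, predictions):
    return mean((y - p) ** 2 for y, p in zip(ys, predictions))


def train(rows, alphas=(0.0, 0.1, 1.0, 10.0)):
    """rows is an ordered list of (feature, target) pairs."""
    # Split 60/20/20 into training, validation and test data.
    first, second = len(rows) * 6 // 10, len(rows) * 8 // 10
    parts = rows[:first], rows[first:second], rows[second:]

    # Fit the preprocessor on the training data only, then reuse it everywhere.
    scaler = Scaler().fit([x for x, _ in parts[0]])
    (x_train, y_train), (x_val, y_val), (x_test, y_test) = (
        (scaler.transform([x for x, _ in part]), [y for _, y in part])
        for part in parts
    )

    # Hyperparameter search: keep the candidate with the lowest validation error.
    candidates = []
    for alpha in alphas:
        model = Ridge1D(alpha).fit(x_train, y_train)
        candidates.append((mse(y_val, model.predict(x_val)), alpha, model))
    best_error, best_alpha, best_model = min(candidates, key=lambda c: c[0])

    # Stand-in for logging parameters and metrics to an experiment tracker.
    return {
        "alpha": best_alpha,
        "validation_mse": best_error,
        "test_mse": mse(y_test, best_model.predict(x_test)),
    }
```

The point of the sketch is the order of operations: the scaler never sees validation or test data when it is fitted, so the reported metrics are not contaminated by information from those splits.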

When a new model has been (re-)trained, it should be registered to the model repository in MLflow. The aim of the model registry is to have version control of model development experiments. Based on the metric logs from the corresponding experiment, it is decided whether the new model should be pushed into staging. If the new model passes the defined performance tests, for example metric thresholds, the previously staged model is archived and the new model is transitioned into staging. A notification is sent to the developer team regarding a new model being put into staging.

The aim of having models in the archived, staging and production stages is to clarify a model’s role in the model repo. A production model is the best model in the current environment, a staging model is the challenger model that will later challenge the production model, and an archived model is a model that has been replaced.

When a new model has been pushed into staging, model performance comparison needs to be carried out between the staging model and the production model, to decide whether the staging model should replace the production model. The model performance is validated on the test data, and the resulting metrics are summarised for the data scientist to make a decision.

If the data scientist decides that the staging model outperforms the production model, the production model is archived and the staging model is pushed to production. A notification is sent to the developer team regarding a new model being put into production.
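The stage transitions described above can be sketched as a small in-memory state machine. This is an illustration only and not the MLflow API: `ModelVersion`, `Registry` and their methods are hypothetical names, and the final promotion, which the text leaves to the data scientist, is reduced here to a simple comparison of test errors.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    name: str
    validation_error: float
    test_error: float
    stage: str = "None"


@dataclass
class Registry:
    versions: list = field(default_factory=list)

    def in_stage(self, stage):
        """Return the single version currently in the given stage, if any."""
        return next((v for v in self.versions if v.stage == stage), None)

    def _move(self, version, stage):
        # Whatever currently occupies the target stage is archived first.
        current = self.in_stage(stage)
        if current is not None:
            current.stage = "Archived"
        version.stage = stage

    def register(self, version, max_validation_error):
        """Register a version; stage it only if it passes the metric threshold."""
        self.versions.append(version)
        if version.validation_error <= max_validation_error:
            self._move(version, "Staging")
        return version.stage

    def promote_if_better(self):
        """Move the staged challenger to production if it beats the champion."""
        challenger = self.in_stage("Staging")
        champion = self.in_stage("Production")
        if challenger is None:
            return False
        if champion is not None and challenger.test_error >= champion.test_error:
            return False
        self._move(challenger, "Production")
        return True
```

Keeping at most one model per stage makes each model's role unambiguous: one champion, at most one challenger, and a history of replaced versions.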

When a new model has been pushed to production, the build environment is notified that a new production model is available. The production model is fetched from the MLflow model registry into a model API, and a test suite is run against the API to ensure that the application behaves as expected. A Docker container is then built using Azure Pipelines, and the model API is exposed on a port and deployed to the cloud.
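What such a test suite might check can be sketched with a minimal request handler and a smoke test. Everything here is an assumption made for illustration: the request format with a `features` field, the `make_handler` and `smoke_test` names, and the use of a plain callable in place of a model fetched from the registry.

```python
import json


def make_handler(model):
    """Wrap a model (any callable on a feature list) in a JSON request handler."""

    def handle(request_body):
        try:
            features = json.loads(request_body)["features"]
            prediction = model(features)
        except (KeyError, TypeError, ValueError) as error:
            # Malformed JSON, a missing field or unusable features: client error.
            return 400, json.dumps({"error": str(error)})
        return 200, json.dumps({"prediction": prediction})

    return handle


def smoke_test(handle):
    """Check that valid requests are answered and malformed ones are rejected."""
    status, body = handle(json.dumps({"features": [1.0, 2.0]}))
    valid_request_ok = status == 200 and "prediction" in json.loads(body)
    status, _ = handle("not json")
    return valid_request_ok and status == 400
```

A build pipeline would run checks of this kind before building the container, and stop the build if any of them fails.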

Verdict

This work proposes an MLOps solution for scalable and repeatable end-to-end ML implementation. The solution is set up using Infrastructure-as-code and contains semi-automated steps for data preprocessing and end-to-end machine learning development workflows. As noticed throughout the development, MLOps increases the quality, simplifies the management process and automates the deployment of ML models in a large-scale production environment. Thus, it becomes easier to align models with business needs.

MLOps creates a wide array of benefits, namely:

- MLOps orchestrates the entire development process

- MLOps monitors data drift

- MLOps leverages agile methods

- MLOps promotes truly reusable components

- MLOps versions both data and models

- MLOps facilitates comparison between models and artefacts

In contrast, there are also some limitations worth mentioning, such as a lack of a common definition of data within the data pipeline, and the dependence on certain tech stacks that limit the generalisation of certain procedures.

All in all, MLOps is a set of principles for establishing a common working framework when implementing ML solutions to meet business operationalisation needs. However, the practical implementation of MLOps depends specifically on each case. Experiment with it and pick the solution that fits your business needs the best.

Footnotes

- 1. McCarthy, J., Minsky, M. L., Rochester, N., & Shannon, C. E. (2006). A proposal for the Dartmouth summer research project on artificial intelligence. AI Magazine, 27(4), 12-14. https://doi.org/10.1609/aimag.v27i4.1904

- 2. Nilsson, N. J. (2009). The Quest for Artificial Intelligence. Cambridge: Cambridge University Press. https://doi.org/10.1017/CBO9780511819346

- 3. Stone, P., Brooks, R., Brynjolfsson, E., Calo, R., Etzioni, O., Hager, G., Hirschberg, J., Kalyanakrishnan, S., Kamar, E., Kraus, S., Leyton-Brown, K., Parkes, D., Press, W., Saxenian, A., Shah, J., Tambe, M., & Teller, A. (2016). Artificial Intelligence and Life in 2030. One Hundred Year Study on Artificial Intelligence: Report of the 2015-2016 Study Panel. Stanford University. Retrieved September 6, 2016, from http://ai100.stanford.edu/2016-report

- 4. Bulao, J. (2022, June 3). How Much Data Is Created Every Day in 2022? Techjury. https://techjury.net/blog/how-much-data-is-created-every-day/

- 5. Information Overload Research Group. (n.d.). Information Overload Research Group. https://iorgforum.org/

- 6. Algorithmia. (2019). 2020 state of enterprise machine learning. Algorithmia. https://info.algorithmia.com/hubfs/2019/Whitepapers/The-State-of-Enterp…

- 7. Visengeriyeva, L., Kammer, A., Bär, I., Kniesz, A., & Plöd, M. (n.d.). ML Ops: Machine Learning Operations. https://ml-ops.org/

- 8. Microsoft. (2021, November 10). Machine learning DevOps guide - Cloud Adoption Framework. https://docs.microsoft.com/en-us/azure/cloud-adoption-framework/ready/a…

- 9. Github. (2022). Great Expectations. https://github.com/great-expectations

- 10. Veach, R. (2022). pymsteams. Python Software Foundation: Python Package Index. https://pypi.org/project/pymsteams/

- 11. Lee, D., & Heintz, B. (2019, August 14). Productionizing Machine Learning with Delta Lake. Databricks: Engineering Blog. https://databricks.com/blog/2019/08/14/productionizing-machine-learning…

- 12. Scikit-learn developers. (2022). Sklearn.pipeline.Pipeline. Scikit-learn. https://scikit-learn.org/stable/modules/generated/sklearn.pipeline.Pipe…

- 13. Bergstra, J., Yamins, D., & Cox, D. D. (2013). Making a Science of Model Search: Hyperparameter Optimization in Hundreds of Dimensions for Vision Architectures. To appear in Proc. of the 30th International Conference on Machine Learning (ICML 2013). http://hyperopt.github.io/hyperopt/